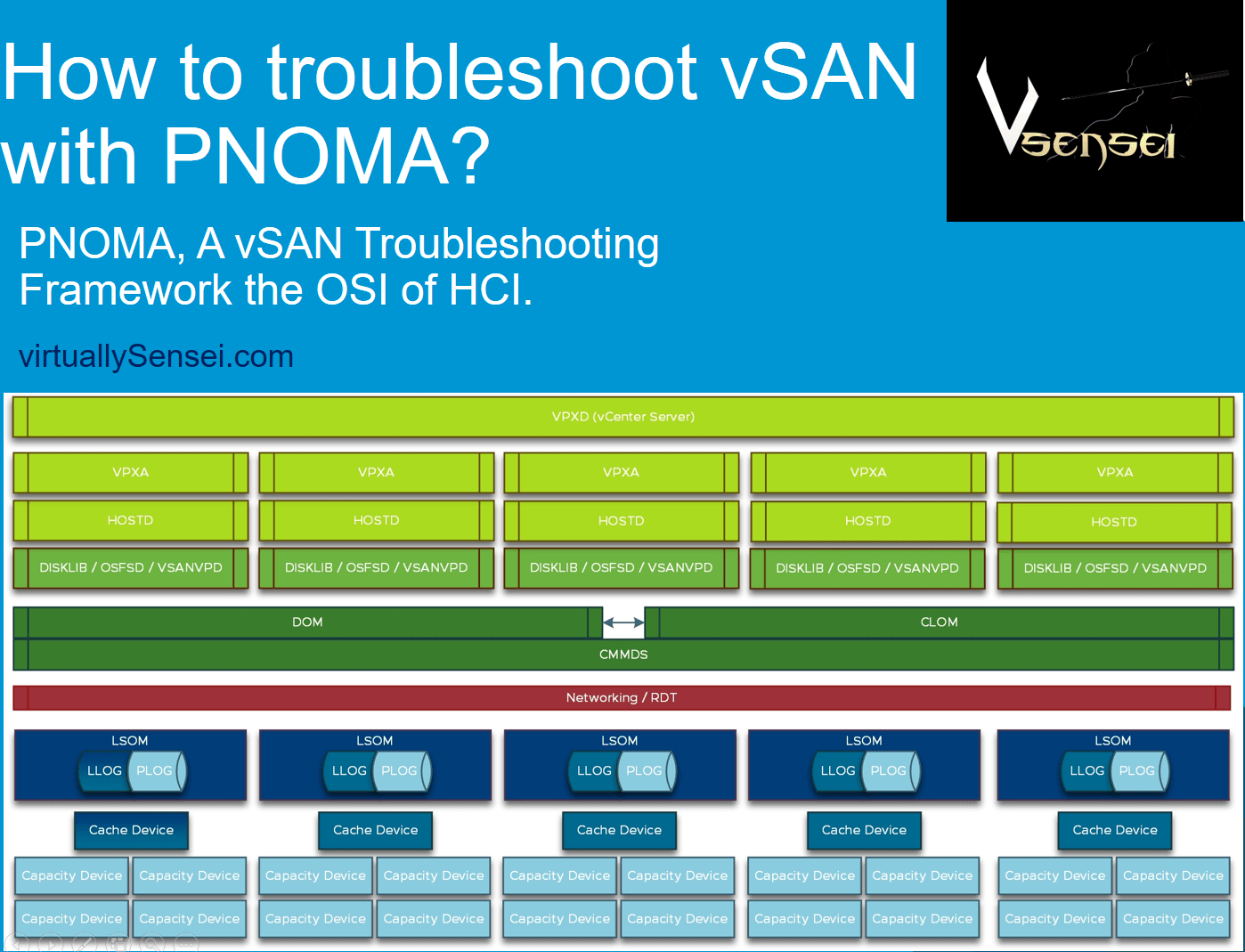

Here is a quick guide to converting a standard vSAN cluster to a stretched cluster, and to converting a stretched cluster back to a standard vSAN cluster.

Converting a Standard vSAN cluster to Stretched Cluster

The work involved in converting a standard cluster to a stretched cluster falls into the three parts listed below. I will also describe a couple of real-life situations where you might need this conversion.

- Pre-requisite

- Steps for converting to stretched cluster

- Post conversion checks

Some use cases:

Suppose the business has grown and you are standing up a second co-location site that is within 5 ms RTT of the first (see LINK). You don't want to use this site as a disaster-recovery/failover site; instead you want an active-active configuration in which users from both offices can run workloads on both sites simultaneously. In such cases, converting a standard vSAN cluster to a stretched cluster is very useful.

Another case is a physical limitation on server racks. Say we have two racks hosting 4 nodes each and we want to withstand a rack failure, but we can't configure regular fault domains because there is no third rack. Here we can host a witness on a different host/cluster/cloud and put the two racks in two separate fault domains to tolerate a rack failure.

Prerequisites:

- A backup of all running VMs is a must, as there is significant risk to the objects backing your virtual machines during this transition.

- Validate the cluster against all best practices and requirements for a stretched cluster. Do read: LINK-1, LINK-2

- You have a separate host, a cluster other than the vSAN stretched cluster, or a cloud provider to host your witness appliance or witness host.

- You do not have any vSAN iSCSI targets configured on the current vSAN cluster. vSAN iSCSI targets are not supported on a vSAN stretched cluster, so you will need to disable the service; leaving it enabled on a stretched cluster can cause a PSOD on the hosts in the cluster.

- You will lose a significant amount of space on the vSAN datastore during this transition. Make sure the cluster is not nearing full capacity; ensure at least 30% free space.

- To be able to remove hosts and add them to a different fault domain, you may have to re-apply the storage policy on VMs whose policy would not be compliant once the hosts are removed. Change the storage policy for a few VMs at a time, because each change triggers a resync; changing the policy on many VMs at once might fill up the datastore, since certain policy changes temporarily need double the space consumed.
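The advice to re-apply policies to a few VMs at a time can be sketched as a simple batch planner. This is only an illustration, not a vSAN tool: the VM names and sizes, the function name, and the `reserve_fraction` parameter are hypothetical. It assumes the worst case mentioned above, namely that a policy change temporarily needs extra space equal to what the VM currently consumes, and that each batch finishes resyncing (releasing that space) before the next one starts.

```python
def plan_policy_batches(vm_used_gb, capacity_gb, used_gb, reserve_fraction=0.30):
    """Group VMs into batches whose transient space need fits the headroom.

    Assumption: re-applying a policy temporarily needs extra space equal
    to the VM's current consumption (the "double the space" worst case).
    """
    # Space that resyncs may consume while the reserve stays free.
    headroom_gb = capacity_gb * (1 - reserve_fraction) - used_gb
    if headroom_gb <= 0:
        raise ValueError("no headroom: free up space before changing policies")

    batches, current, current_gb = [], [], 0
    # Largest VMs first, so a VM that can never fit is detected early.
    for name, size_gb in sorted(vm_used_gb.items(), key=lambda kv: -kv[1]):
        if size_gb > headroom_gb:
            raise ValueError(f"{name} alone needs more than the headroom")
        if current_gb + size_gb > headroom_gb:
            # Close the current batch and start a new one.
            batches.append(current)
            current, current_gb = [], 0
        current.append(name)
        current_gb += size_gb
    if current:
        batches.append(current)
    return batches


# Hypothetical inventory: 10 TB datastore with 5 TB used.
vms = {"Linux-2": 700, "VM-FTT-0": 300, "app-01": 1200, "db-01": 1500}
batches = plan_policy_batches(vms, capacity_gb=10000, used_gb=5000)
print(batches)  # → [['db-01'], ['app-01', 'Linux-2'], ['VM-FTT-0']]
```

With a 30% reserve the headroom here is about 2 TB, so the four VMs end up in three batches instead of one large policy change.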

Steps for converting standard vSAN cluster to stretched cluster

You may need to physically disconnect one or more hosts from the cluster in order to relocate them to your other site.

In this example I have a 4-node vSAN cluster running vSAN 6.7 with vCenter Server 6.7 U1. The steps should be similar for all vSAN versions that support stretched clusters.

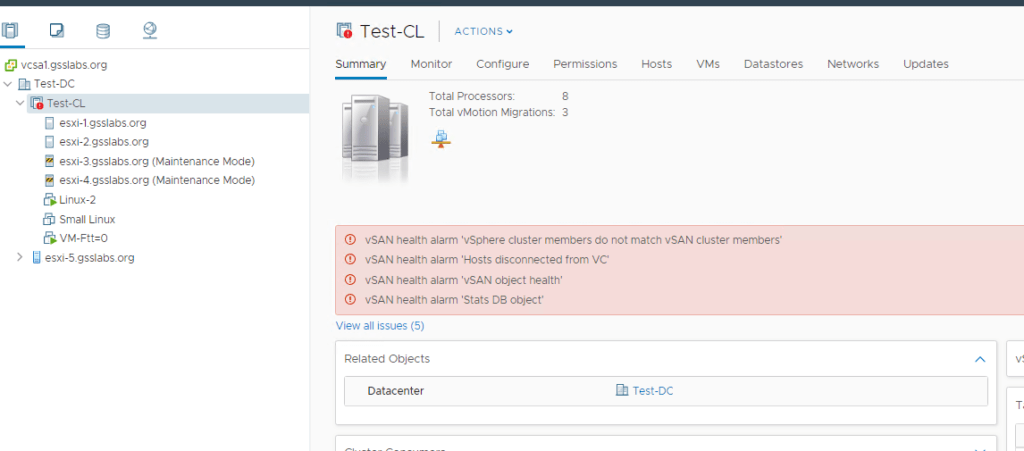

In my setup (these are nested ESXi hosts, not physical ones) I would like to use esxi-1-1 and esxi-1-2 on the primary/preferred site and esxi-3 and esxi-4 on the secondary site. I have added a witness appliance, esxi-5, to the datacenter as a host; it is deployed on a different cluster.

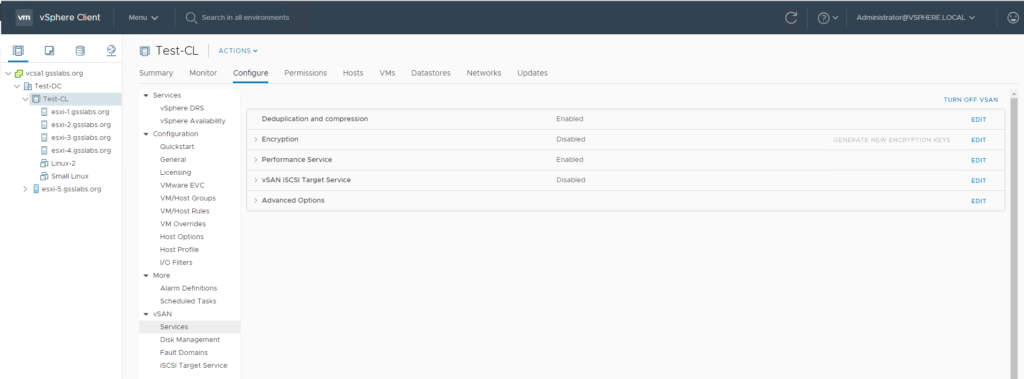

The screenshot above shows that only deduplication and compression are enabled on this cluster and that the vSAN iSCSI target service is not enabled.

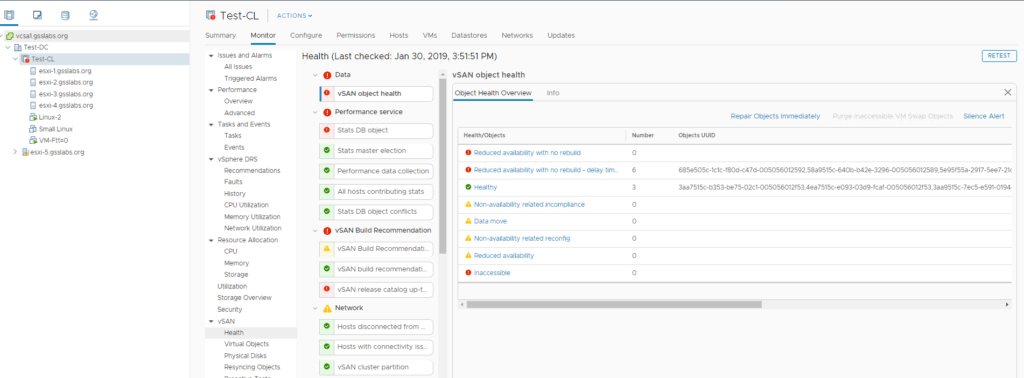

Step 1: Before taking any action, make sure that all VM storage policies are compliant and that vSAN health reports no errors under the “Data” section.

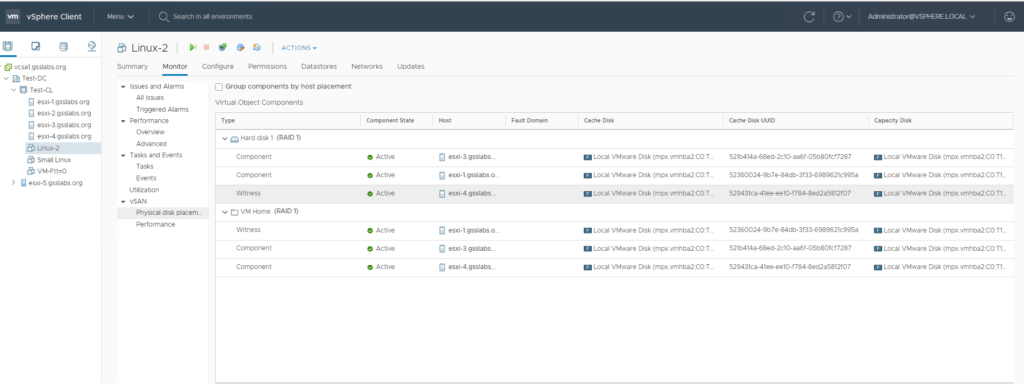

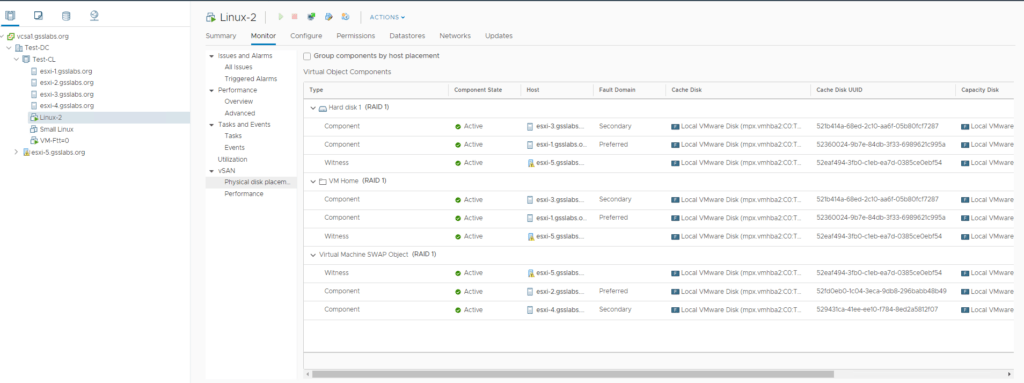

Let's look at two VMs and their component placement to see how the components are laid out before we place the two hosts in maintenance mode.

First VM: Linux-2 has its storage policy set to FTT=1 (RAID-1); the components for the VMDK and VM home folder are on hosts 1, 3 and 4.

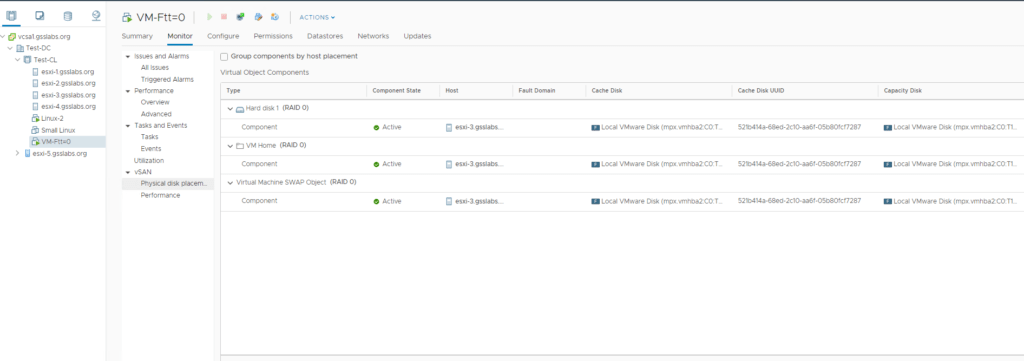

Second VM: VM-FTT-0 has its storage policy set to FTT=0 (RAID-0); the components for the VMDK and VM home folder are on host 3.

Step 2: Place hosts esxi-3 and esxi-4 in maintenance mode with “Ensure accessibility”. The VMs running on these hosts will move to esxi-1 and esxi-2. Any objects that would become inaccessible without esxi-3 and esxi-4 (such as FTT=0 objects) are rebuilt onto esxi-1 and esxi-2 before the hosts fully enter maintenance mode. Afterwards you can disconnect the hosts and physically move them to the co-location.

- With host-03 and host-04 in maintenance mode, look at the component placement.

From the above screenshots it's clear that VMs with FTT=1 go non-compliant, because there are no more hosts on which to place their components, while FTT=0 objects from the hosts put in maintenance mode have moved to the hosts that are still accessible.

Note: As explained in the prerequisites, if you plan to keep 3 or more nodes on each side, you will retain some hosts and place the rest in maintenance mode. However, when 3 or more hosts remain accessible, vSAN will try to rebuild absent components onto the hosts that are not in maintenance mode. This can be a good thing, or it can cause a disaster in certain cases.

- If there is enough free space on the vSAN datastore after placing half of the cluster hosts in maintenance mode, we should allow vSAN to rebuild the absent components so that we are protected against failures of disks, disk groups, or even hosts.

- It becomes a disaster when a huge resync triggers for the absent objects and the datastore does not have enough space to accommodate it. There is no way to stop the resync once it triggers. In that case we need to immediately add the removed hosts back into the cluster to avoid downtime, allow the resync to finish, and then place the hosts that need to move back in maintenance mode with “Ensure accessibility”. Before moving them, set the repair delay to a value larger than the time it will take to move the hosts to the other site, and restart the clomd service so the value takes effect immediately.
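The "enough free space" question in the two bullets above can be estimated before any host enters maintenance mode. The sketch below is a rough model, not something vSAN provides: the function name, the host names and capacities, and the 30% reserve are assumptions for illustration. It treats the rebuild as complete, so all currently consumed space has to fit on the hosts that stay.

```python
def rebuild_fits(host_capacity_gb, used_gb, hosts_leaving, reserve_fraction=0.30):
    """Return True if all consumed space still fits on the hosts that stay.

    Assumption: after a full rebuild the remaining hosts hold every
    component, so they must absorb the whole of used_gb while keeping
    reserve_fraction of their capacity free.
    """
    remaining_gb = sum(
        cap for host, cap in host_capacity_gb.items() if host not in hosts_leaving
    )
    return used_gb <= remaining_gb * (1 - reserve_fraction)


# Hypothetical 6-node cluster, 2 TB per host, three hosts moving out.
hosts = {f"esxi-{i}": 2000 for i in range(1, 7)}
leaving = {"esxi-4", "esxi-5", "esxi-6"}

print(rebuild_fits(hosts, used_gb=3000, hosts_leaving=leaving))  # → True
print(rebuild_fits(hosts, used_gb=5000, hosts_leaving=leaving))  # → False
```

If the check fails, that is the situation in which raising the repair delay before the move is the safer option.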

In my case only two hosts are still contributing to vSAN storage, so vSAN won't start rebuilding any absent components: there aren't enough hosts to satisfy the policy.
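The reason two hosts are not enough follows from the host count that RAID-1 mirroring needs: tolerating n failures requires 2n + 1 hosts (n + 1 replicas plus n witness components). A minimal sketch of that rule, with hypothetical function names:

```python
def min_hosts_for_mirroring(ftt):
    """Hosts needed by a RAID-1 policy that tolerates `ftt` failures."""
    return 2 * ftt + 1


def can_rebuild(active_hosts, ftt):
    """True if enough hosts remain for vSAN to re-protect the object."""
    return active_hosts >= min_hosts_for_mirroring(ftt)


print(can_rebuild(active_hosts=2, ftt=1))  # → False (my 2 remaining hosts)
print(can_rebuild(active_hosts=3, ftt=1))  # → True
```

This only covers the host-count condition for mirrored objects; free capacity still has to be checked separately.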

If 3 or more hosts are still active and it will take more than 2 days to bring the other hosts back onto the network, we need to perform the following task to prevent a resync from triggering and potentially filling up the datastore.

Allowing an extra day, the approximate repair delay value I need to set is 3*24*60 = 4320 minutes.

So I will SSH to each host and run the commands `esxcfg-advcfg -s 4320 /VSAN/ClomRepairDelay` and `/etc/init.d/clomd restart`.

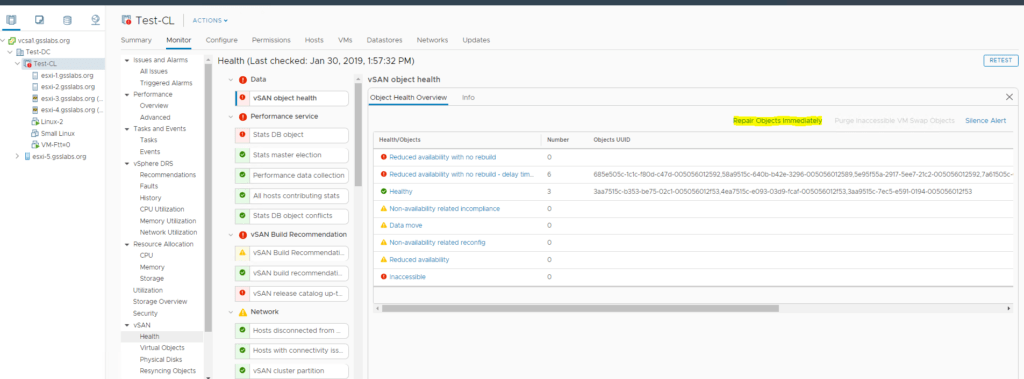
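The repair delay arithmetic is easy to get wrong under pressure, so here is a small sketch that derives the value and prints the two commands used above. The function names and the one-day buffer default are my own choices for illustration; the commands themselves are the ones shown in this post.

```python
import math


def repair_delay_minutes(expected_move_days, buffer_days=1):
    """Repair delay in minutes: expected move time plus a safety buffer."""
    return math.ceil((expected_move_days + buffer_days) * 24 * 60)


def repair_delay_commands(minutes):
    """Commands to run over SSH on every host in the cluster."""
    return [
        f"esxcfg-advcfg -s {minutes} /VSAN/ClomRepairDelay",
        "/etc/init.d/clomd restart",  # restart clomd so the value applies now
    ]


minutes = repair_delay_minutes(2)  # 2 days to move, plus 1 extra day
commands = repair_delay_commands(minutes)
for cmd in commands:
    print(cmd)
# prints:
# esxcfg-advcfg -s 4320 /VSAN/ClomRepairDelay
# /etc/init.d/clomd restart
```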

Assuming I have 3 or more nodes remaining and all my VMs can still be compliant with their storage policy while the rest of the hosts are being moved to the secondary site, I will force a full re-protect of all my VMs soon after placing the required hosts in maintenance mode, by clicking the “Repair objects immediately” button under Cluster ⇒ Monitor ⇒ vSAN ⇒ Health ⇒ Data ⇒ vSAN Object health. This helps tolerate additional failures within the cluster while we stand up the rest of the hosts on the other site.

Step 3: Complete the stretched cluster conversion with the following tasks:

- Reconnect the hosts to the cluster and exit maintenance mode.

- Configure the fault domains and the stretched cluster.

- Exit maintenance mode on the hosts that were added to the secondary fault domain.

- Repair objects immediately and review the component placement for confirmation.

- If any non-critical VMs were set to FTT=0 to address a space crunch before configuring the stretched cluster, they can now be re-protected with an FTT=1 policy, since the returned hosts are contributing space to the datastore again.

Converting a Stretched vSAN Cluster to a regular vSAN cluster

There may be situations where we need better space efficiency on the vSAN datastore and the stretched cluster is not working well for us, situations where we need vSAN iSCSI targets for Windows failover clustering, or situations where maintaining a separate (witness) host turns out to be unnecessary. In these cases a stretched vSAN cluster can be converted to a standard vSAN cluster.

Prerequisites:

- A backup of all running VMs is a must, as there is significant risk to the objects backing your virtual machines during this transition.

- Make sure all VMs are compliant with their storage policy, there are no objects currently resyncing, and all vSAN health checks are clean.

- Read official page here

Steps for converting Stretched vSAN cluster to standard cluster

Complete the conversion of the stretched cluster to a standard vSAN cluster with the following tasks:

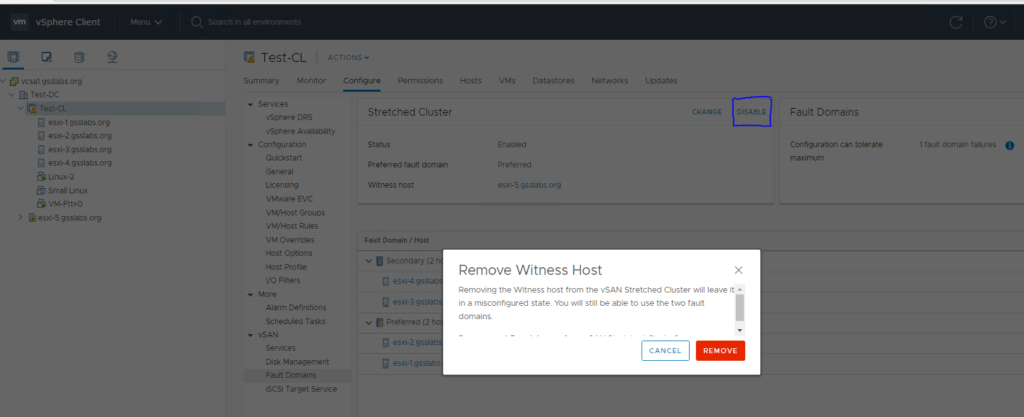

- Disable the stretched cluster: Cluster ⇒ Configure ⇒ vSAN ⇒ Fault Domains ⇒ Stretched Cluster ⇒ Disable.

- Destroy the fault domains.

- Repair all the objects immediately.

- Change the storage policy for a few VMs at a time, if required, to achieve additional space efficiency. (This step is optional.)

- Remove the witness node from the inventory to avoid any confusion. (This step is optional.)

Thank you for taking the time to understand the process of converting a standard cluster to a stretched cluster and vice versa. Feel free to comment below if you have any questions.