Introduction

vSAN-ESA introduces the concept of Storage Pools, in which all the disks in a given storage pool contribute towards the total capacity available for consumption. This initial release of vSAN ESA supports compression and encryption, but it does not support deduplication. vSAN ESA also introduces a log-structured file system (LFS), which I discussed briefly in my previous blog post, see My-take-on-ESA.

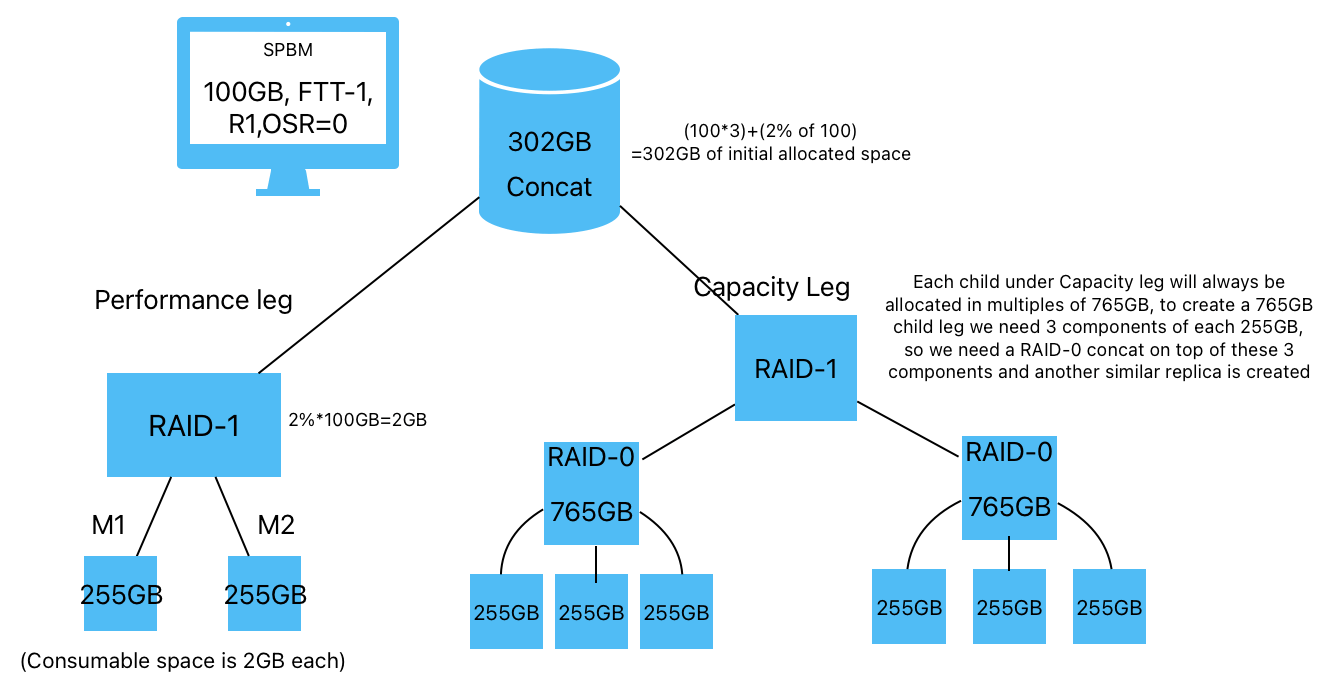

When objects are created on a vSAN-ESA datastore, the default initial allocated space for each object is 3X the object size + 2% of the object size.

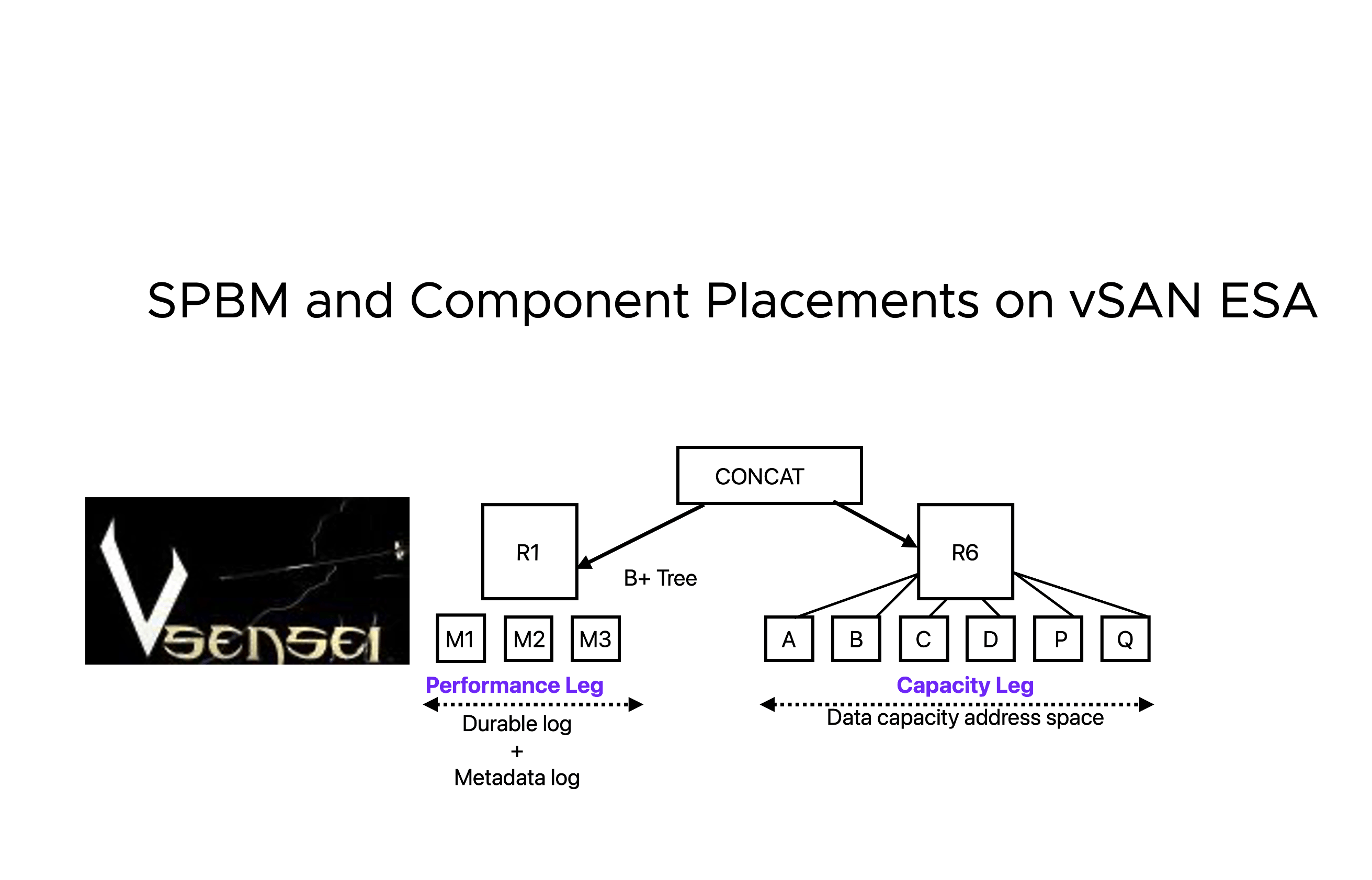

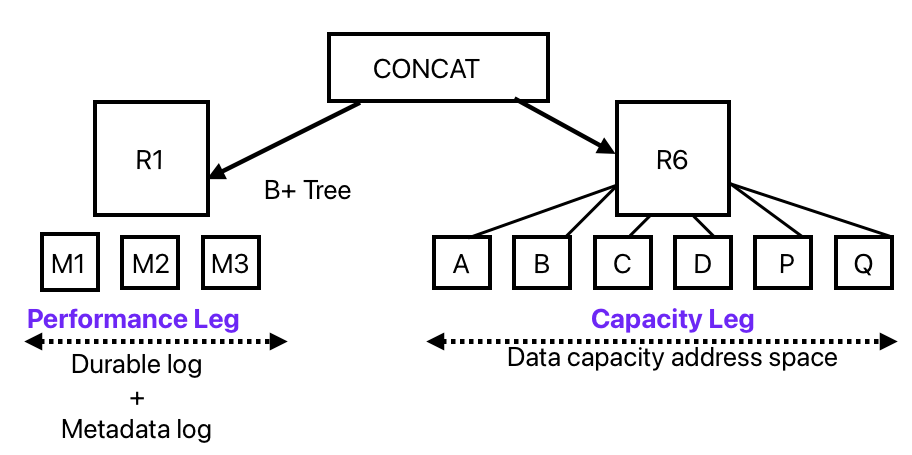

The 2% is used for the performance leg in the object layout. If you would like to know more about performance and capacity legs, I suggest reading through my previous blog post on vSAN-ESA LFS.
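As a minimal sketch of the default allocation rule described above, the hypothetical Python helper below computes the initial allocation for one copy of an object: 3X the object size plus 2% of the object size, with that 2% set aside for the performance leg. The function and constant names are illustrative assumptions, not part of any vSAN API.

```python
"""Sketch of the default vSAN ESA initial allocation rule (illustrative only)."""

PERF_LEG_FRACTION = 0.02  # 2% of the object size goes to the performance leg
CAPACITY_MULTIPLIER = 3   # the "3X the object size" part of the rule


def esa_initial_allocation_gb(object_size_gb: float) -> dict:
    """Return the default initial allocation, in GB, for a single copy of an object."""
    if object_size_gb < 0:
        raise ValueError("object size must be non-negative")
    performance_leg = PERF_LEG_FRACTION * object_size_gb
    total = CAPACITY_MULTIPLIER * object_size_gb + performance_leg
    return {"performance_leg_gb": performance_leg, "total_gb": total}


if __name__ == "__main__":
    # 100 GB object: 3 * 100 + 2% of 100
    print(esa_initial_allocation_gb(100))
```

For a 100GB object this gives a 2GB performance leg and a 302GB total, matching the RAID-1 example later in this post.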

The capacity leg's allocated space is included in this 3X object size + 2% total. In addition, every child under the capacity leg is always created in multiples of 765 GB, and depending on the size of the object, several such child legs are created. Finally, the performance leg and the capacity legs are concatenated at the top.
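The 765 GB granularity can be illustrated with a small sketch. It assumes, purely for illustration, that the children simply tile the capacity leg's address space and that a partial child is rounded up to a whole one; which size should be fed in (the object size or the full 3X allocation) is not stated in this post, so the input is an assumption too. `capacity_child_count` is a hypothetical name.

```python
import math

CHILD_LEG_GB = 765  # each capacity-leg child is created in units of 765 GB


def capacity_child_count(address_space_gb: float, child_gb: float = CHILD_LEG_GB) -> int:
    """Number of 765 GB children needed to cover the given address space.

    Assumes the children simply tile the capacity leg's address space,
    rounding up to a whole child (an illustrative assumption).
    """
    if address_space_gb < 0:
        raise ValueError("address space must be non-negative")
    return math.ceil(address_space_gb / child_gb)


if __name__ == "__main__":
    for size_gb in (500, 765, 1500, 4608):
        print(size_gb, "GB ->", capacity_child_count(size_gb), "child leg(s)")
```

Rounding up reflects the idea that space is only ever added in whole 765 GB children, which is also how the snapshot-growth behaviour in the RAID-5 examples is described.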

Stripe Width on vSAN ESA

vSAN-ESA is effectively immune to the stripe-width setting: by default, vSAN-ESA tries to provide a stripe width of 2, and any value higher than 2 is ignored, because a higher stripe width gives the objects no performance benefit. Users are still allowed to define a higher stripe width only because the SPBM UI is shared between vSAN-OSA and vSAN-ESA; a vCenter Server can manage one or more ESA and OSA clusters at the same time, so the policy definition permits a stripe width greater than 2.

Thick Provisioning on vSAN ESA

vSAN-ESA allows thick provisioning, very much like OSA. There are some myths circulating on this topic claiming that thick-provisioned objects perform better than thin-provisioned objects; these claims are false. It is recommended to use thin provisioning at all times and never use thick provisioning unless.

RAID-1 on vSAN ESA

vSAN-ESA allows users to create RAID-1 objects on 3 or more hosts in the cluster, and the number of host failures to tolerate can be set up to 3. The provisioned space for an object that tolerates one host failure will be (3X object size + 2% of object size) x 2 mirrors.

For example, if the object size is 100GB, the allocated space for each mirror of this object will be (100*3) + (2% of 100) = 302GB, or 604GB across both mirrors. Although this looks like a lot of over-provisioned space, the object should not consume more than 202GB in total (100*2 + 2% of 100) unless snapshots are created on it and grow beyond the provisioned space.
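The RAID-1 arithmetic can be sketched as follows. This is a hypothetical helper, not a vSAN tool: it applies the (3X + 2%) x mirrors formula above, uses the usual RAID-1 rule of ftt + 1 mirrors for ftt host failures to tolerate, and computes the expected maximum consumption the same way as the 202GB example (object size x mirrors + 2%). Applying that consumption formula to ftt values above 1 is an extrapolation.

```python
"""Sketch of RAID-1 allocation on vSAN ESA, following the formula in this post."""


def raid1_allocation_gb(object_size_gb: float, ftt: int = 1) -> dict:
    """Allocated and expected maximum consumed space (GB) for a RAID-1 object.

    ftt is the number of host failures to tolerate (1-3); RAID-1 keeps ftt + 1 mirrors.
    """
    if not 1 <= ftt <= 3:
        raise ValueError("RAID-1 on ESA supports tolerating 1 to 3 host failures")
    mirrors = ftt + 1
    perf_leg = 0.02 * object_size_gb
    per_mirror = 3 * object_size_gb + perf_leg  # 3X + 2% per mirror
    return {
        "per_mirror_gb": per_mirror,
        "allocated_gb": per_mirror * mirrors,
        # Same arithmetic as the 202 GB example; for ftt > 1 this is an extrapolation.
        "max_consumed_gb": object_size_gb * mirrors + perf_leg,
    }


if __name__ == "__main__":
    print(raid1_allocation_gb(100))  # 100 GB object, one host failure tolerated
```

For the 100GB example this reproduces 302GB per mirror, 604GB allocated in total, and a 202GB expected maximum consumption without snapshots.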

RAID-5 with 2+1, 3+1, 4+1 hosts – ESA

vSAN-ESA also allows users to deploy VMs with a RAID-5 storage policy on just 3 nodes (2 data + 1 parity), and the layout remains the same even with 4 hosts (3+1). This layout consumes just 1.5x the size of the object, a significant saving compared with the 2x of RAID-1 mirroring. vSAN OSA never allowed users to create a RAID-5 object on just 3 nodes: a RAID-5 object on OSA needs a minimum of 4 hosts and uses 1.33x the size of the object. ESA also offers a different layout (4 data + 1 parity) when the cluster has 6 or more hosts, and a RAID-5 object with this layout consumes only 1.25x its size. Hence ESA provides better overall space savings with erasure coding.
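A small sketch can make the RAID-5 layout rules and their overhead concrete. The function names are hypothetical. The host thresholds come from the paragraph above (2+1 on 3 and 4 hosts, 4+1 on 6 or more, OSA 3+1 from 4 hosts); treating 5 hosts as 2+1 is inferred from the "6 or more hosts" threshold. Overhead is computed as (data + parity) / data, and `Fraction` keeps 1.5x, 1.25x and 1.33x exact.

```python
"""Sketch of RAID-5 layout selection and space overhead, per the rules in this post."""
from fractions import Fraction
from typing import Tuple


def esa_raid5_layout(hosts: int) -> Tuple[int, int, Fraction]:
    """Return (data, parity, overhead) for an ESA RAID-5 object on `hosts` hosts."""
    if hosts < 3:
        raise ValueError("ESA RAID-5 needs at least 3 hosts")
    # 2+1 on 3 to 5 hosts (5 inferred), 4+1 once the cluster has 6 or more hosts
    data, parity = (4, 1) if hosts >= 6 else (2, 1)
    return data, parity, Fraction(data + parity, data)


def osa_raid5_layout(hosts: int) -> Tuple[int, int, Fraction]:
    """Return (data, parity, overhead) for an OSA RAID-5 object (3+1, 4 hosts minimum)."""
    if hosts < 4:
        raise ValueError("OSA RAID-5 needs at least 4 hosts")
    return 3, 1, Fraction(4, 3)


def max_consumption(object_size: Fraction, overhead: Fraction) -> Fraction:
    """Space consumed when the object is completely full and has no snapshots."""
    return object_size * overhead


if __name__ == "__main__":
    size_tb = Fraction("1.5")
    for hosts in (3, 4, 6):
        data, parity, overhead = esa_raid5_layout(hosts)
        print(f"ESA, {hosts} hosts: {data}+{parity}, {float(overhead)}x, "
              f"1.5TB object -> up to {float(max_consumption(size_tb, overhead))}TB")
```

For a 1.5TB object this yields 2.25TB on 3 or 4 hosts and 1.875TB on 6 hosts, the same figures used in the two examples that follow.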

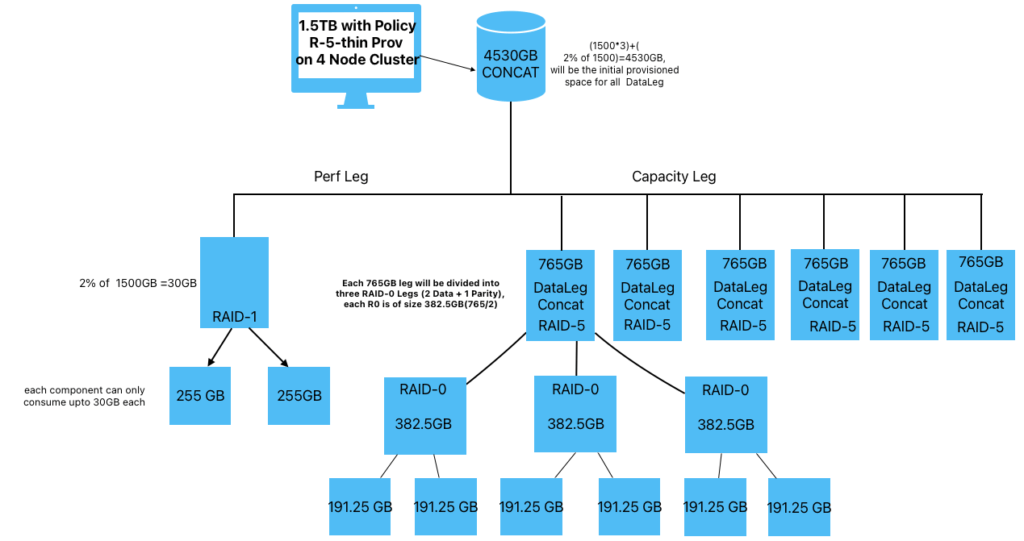

Example: 1.5TB object created with 4 hosts (3+1) layout with RAID-5 SPBM

In the above example, the 1.5TB object can consume up to 2.25TB (1.5TB * 1.5 RAID-5 overhead) when it uses all of its space without snapshots. If the user creates snapshots when the object is approaching its full capacity, additional 765GB child legs are spawned to accommodate snapshot growth. You will also see that the third RAID-0 child, which holds parity, is allocated the same size as the data legs; this is expected, as that child only consumes space for parity rather than for data like the data legs.

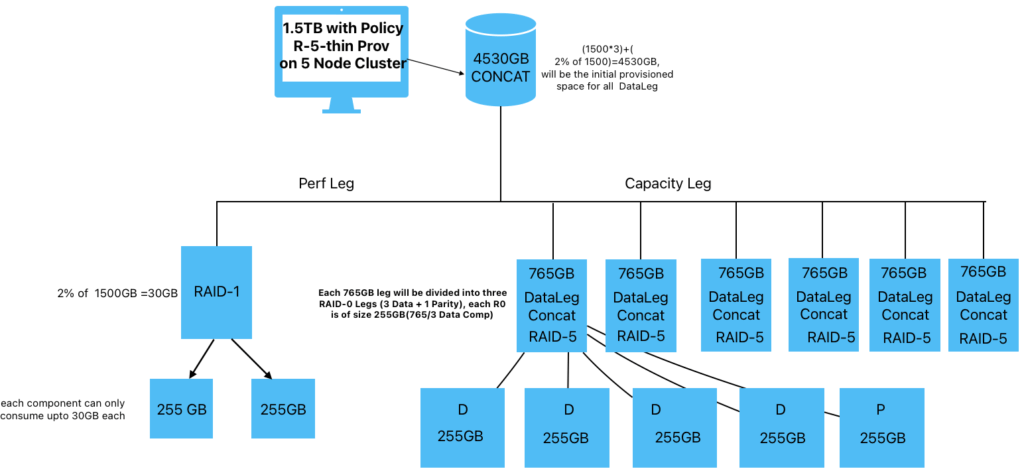

Example: 1.5TB object created with 6 hosts (4+1+1 spare) layout with RAID-5 SPBM

In the above example, this object can consume up to 1.875TB (1.5TB * 1.25 RAID-5 overhead) when its space is fully consumed. If the user creates snapshots while the object is approaching its full capacity, additional 765GB child legs are spawned to accommodate snapshot growth. The parity child P under the 765GB leg is allocated the same size as the data legs; however, it is only used to write parity data.

When there is a host failure, or when a cluster is downsized from a 4+1 to a 3+1 or 2+1 layout, objects are automatically and adaptively reconfigured; you might want to read through the “Adaptive RAID-5” section on the official VMware blogs.

RAID-6 with 6 or more Hosts – ESA

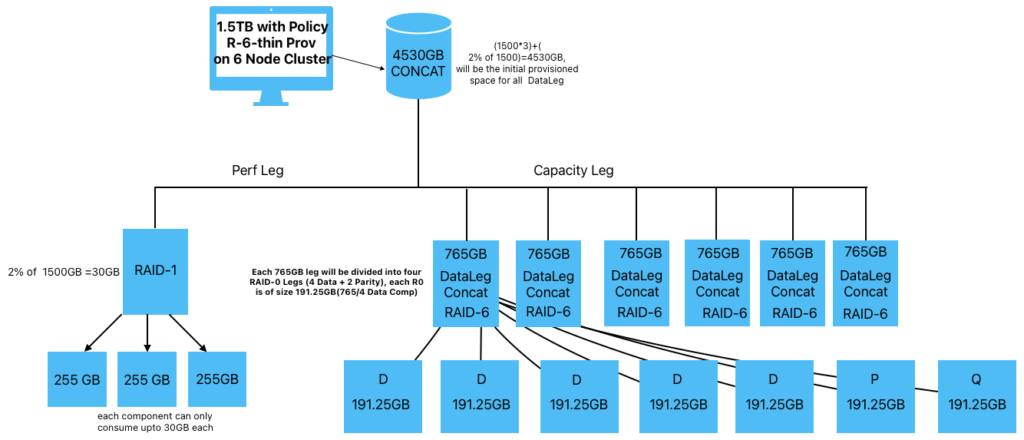

Example: 1.5TB object created with 6 hosts (4+2) layout with RAID-6 SPBM

RAID-6 behaviour on vSAN ESA is the same as on OSA, which means any RAID-6 object created on vSAN-ESA will consume 1.5x the size of the object. The performance leg will have a total of 3 mirrored components in order to tolerate 2 host failures. Each 765GB capacity-leg child will consist of 6 components of 191.25GB each (765/4), as there are 4 data components and 2 parity components. The parity components are allocated the same 191.25GB but only consume space for parity. If no snapshots were created on the object, it could grow up to 2.25TB (1.5TB * 1.5 RAID-6 overhead), similar to OSA.
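The RAID-6 sizing above can be checked with a short sketch. `child_components` and `performance_leg_mirrors` are hypothetical helpers: the first splits one 765GB capacity-leg child into data and parity components of 765 / data GB each, as described for the 4+2 layout; the second counts performance-leg mirrors as ftt + 1 (3 copies to tolerate 2 host failures). This post only states the 765/4 split for RAID-6, so using the helper for other layouts is an assumption.

```python
"""Sketch of RAID-6 capacity-leg child sizing on vSAN ESA (illustrative only)."""
from fractions import Fraction

CHILD_LEG_GB = Fraction(765)  # each capacity-leg child covers 765 GB


def child_components(data: int, parity: int, child_gb: Fraction = CHILD_LEG_GB) -> dict:
    """Split one 765 GB child across data and parity components.

    Each component is allocated child_gb / data; parity components get the same
    allocation but only hold parity.
    """
    component_gb = child_gb / data
    count = data + parity
    return {"component_gb": component_gb, "components": count,
            "allocated_gb": component_gb * count}


def performance_leg_mirrors(ftt: int) -> int:
    """The performance leg is mirrored: ftt + 1 copies (3 copies for ftt = 2)."""
    return ftt + 1


if __name__ == "__main__":
    r6 = child_components(data=4, parity=2)  # RAID-6 4+2
    print(float(r6["component_gb"]), r6["components"], float(r6["allocated_gb"]))
    print(performance_leg_mirrors(2))
    print(float(Fraction("1.5") * Fraction(6, 4)), "TB max without snapshots")
```

For the 4+2 layout this gives six 191.25GB components (1147.5GB allocated per 765GB child), three performance-leg mirrors, and a 2.25TB ceiling for a 1.5TB object without snapshots.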