I highly encourage you to check out my previous blog to understand how vSAN encryption works before you jump into troubleshooting problems with vSAN and a KMS server .

This blog should give you a general approach towards troubleshooting problems with vSAN-encryption and also be able to look at a specific log location in order to isolate and fix a specific problem . I have also included certain test-case scenario to see how the logging looks on the host when there is an issue with vSAN-encryption .

Preliminary checklist

- Content for checklist is available here at : Checklist

Check KMS connectivity

As discussed in the previous introduction blog , it is highly critical to note that the KMS server should NOT be deployed on the same cluster which is being encrypted which will lead to a catch-22 situation . Take a look the file “esx.conf” which should be under /etc/vmware/ and grep kmip to view KMS configuration on the ESXi host if the KMS was configured with FQDN , then it is necessary for the host to be able to resolve the KMS server thru its FQDN . It is okay to have VC down in all scenario as long as the KMS is reachable for the host .

[root@enc-h1:~] grep kmip /etc/vmware/esx.conf /vsan/kmipServer/child[0001]/old = "false" /vsan/kmipServer/child[0001]/port = "5696" /vsan/kmipServer/child[0001]/address = "hyt-s.vsensei.local" /vsan/kmipServer/child[0001]/name = "hyt-Secondary" /vsan/kmipServer/child[0001]/kmipClusterId = "vSAN-Encryption" /vsan/kmipServer/child[0001]/kmskey = "vSAN-Encryption/hyt-Secondary" /vsan/kmipServer/child[0000]/kmskey = "vSAN-Encryption/hyt-primary" /vsan/kmipServer/child[0000]/kmipClusterId = "vSAN-Encryption" /vsan/kmipServer/child[0000]/name = "hyt-primary" /vsan/kmipServer/child[0000]/address = "hyt-p.vsensei.local" /vsan/kmipServer/child[0000]/port = "5696" /vsan/kmipServer/child[0000]/old = "false" /vsan/kmipClusterId = "vSAN-Encryption" [root@enc-h1:~]

In the above output we see that we have two KMS server configuration pushed from VC to Hosts which are “hyt-p.vsensei.local” and “hyt-s.vsensei.local” and port used for connecting to KMS is 5696 .

Confirm if we have the right KMIP certificates

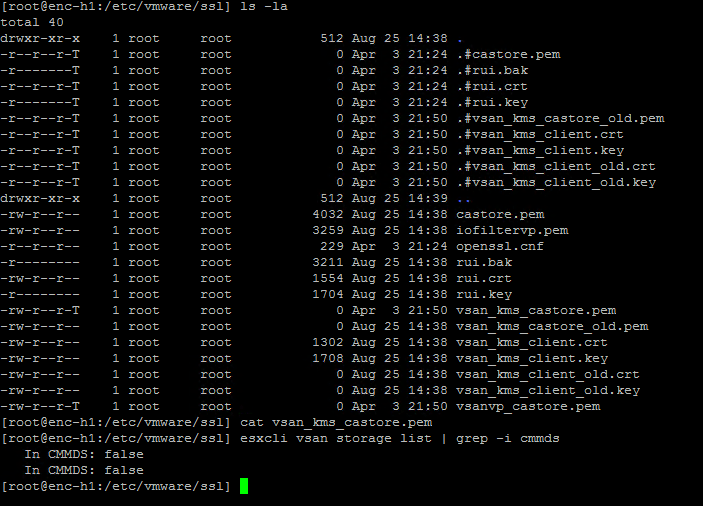

Navigate to the path /etc/vmware/ssl on one of the hosts which is part of the vSAN-encrypted cluster and check the certificates on the files vsan_kms_client.crt (client Certificate) , vsan_kms_client.key (private key)and vsan_kms_castore.pem . The vsan_kms_castore.pem file is a copy of the server certificate that the host uses to compare with the cert returned by the KMIP server during initial SSL handshake. If the server cert has been changed and does not match what ESXi has stored here, the connection will not be established.

Here is how you can compare and confirm if we have the right certificates and keys on the respective files we have on the hosts .

[root@enc-h1:/etc/vmware/ssl] ls -la *kms* -rw-r--r-T 1 root root 2726 Aug 24 02:30 vsan_kms_castore.pem -rw-r--r-T 1 root root 0 Aug 24 02:38 vsan_kms_castore_old.pem -rw-r--r-T 1 root root 1302 Aug 24 02:30 vsan_kms_client.crt -rw-r--r-T 1 root root 1708 Aug 24 02:30 vsan_kms_client.key -rw-r--r-T 1 root root 0 Aug 24 02:38 vsan_kms_client_old.crt -rw-r--r-T 1 root root 0 Aug 24 02:38 vsan_kms_client_old.key

From , the above list of files , the first file we will need to look at is “vsan_kms_client.crt” this KMS client key should match the KMS-Client-Certificate which was issued by the KMS server to the vcenter server , if you dont have the file locally to compare the key you may choose to re download pem file from the KMS server .

Now cat the file “vsan_kms_client.crt” on the ESXI host and compare it with first half of the file which contains client certificate here its “vcenter.pem” which we downloaded from the KMS server .

Cat the next file “vsan_kms_client.key” , this should match with the private key on the same PEM file which was downloaded .

Next up is “vsan_kms_castore.pem” , this file contains server side certificate from the KMS server , the contents /certificates in this file depends on the total number of KMS servers with which the ESXi hosts are connected , in my current example here we have two KMS servers (primary and secondary ) , hence we should have two certs one from each KMS server .

Log in to Hy-Trust (KMS used in my lab) ⇒ Clusters⇒ Select-first-KMS⇒ View Current Certificate , this certificate must match the content on one of the certificates within “vsan_kms_castore.pem” file , similarly the other certificate on the same file must match the server certificate on the secondary KMS server .

From the above screenshots we can see that all certificates stored on the hosts on respective files matches certificates issued/stored on the KMS servers in question . If vCenter is available and the host is missing any of this information, vCenter will provide the host with copies of the certificates it has stored in VECS. If the vcenter server is down we should be able to manually copy the contents from the KMS server on to those respective files which should fix/resolve certificate mismatches on the hosts .

Ensure that host can enter crypto-safe mode

See content for details around host crypto safe mode here

To determine if a HostKey has been installed (i.e. the host is crypto-safe), you can use the UI (if available).Select the host in the inventory and go to Configure ⇒Security Profile ⇒Host Encryption Mode

All additional details from krypto-safemode is avaialable here

Certificate related issues

If we were missing any of the file which contains certificate data under /etc/vmware/ssl (vsan_kms_castore.pem , vsan_kms_client.crt , vsan_kms_client.key ) you will see errors relating to certificates on the same syslog.log file . To simulate this problem I simply placed host-1 in MM Mode and deleted the file vsan_kms_castore.pem under /etc/vmware/ssl and rebooted the host .

After I rebooted the host , we see that the file “vsan_kms_castore.pem” was recreated , however was of 0 bytes with content inside it . Hence the Disk group on this did not mount over the reboot , lets check the logs for error messages .

From syslog.log :

2018-08-25T14:38:17Z jumpstart[2097463]: VsanUtil: Get kms client key and cert, old:0 2018-08-25T14:38:17Z jumpstart[2097463]: VsanUtil: GetKmsServerCerts KMS certs not found 2018-08-25T14:38:17Z jumpstart[2097463]: VsanInfoImpl: Joining vSAN cluster 5232826c-507e-1f30-197d-605f6d44d413 2018-08-25T14:38:17Z jumpstart[2097463]: VsanInfoImpl: SyncConfigurationCallback called 2018-08-25T14:38:17Z jumpstart[2097463]: VsanSysinfo: Loading module cmmds 2018-08-25T14:38:22Z jumpstart[2097463]: VsanInfoImpl: Retrieving the host key with keyId: b2385c26-a745-11e8-bbd4-005056b85466 2018-08-25T14:38:22Z jumpstart[2097463]: VsanUtil: Get kms client key and cert, old:1 2018-08-25T14:38:22Z jumpstart[2097463]: VsanUtil: GetKmsServerCerts Old KMS certs not found 2018-08-25T14:38:22Z jumpstart[2097463]: VsanInfoImpl: Failed to retrieve host key from KMS: Invalid key or certs. Will have 1 retries. 2018-08-25T14:38:27Z jumpstart[2097463]: VsanUtil: Get kms client key and cert, old:1 2018-08-25T14:38:27Z jumpstart[2097463]: VsanUtil: GetKmsServerCerts Old KMS certs not found 2018-08-25T14:38:27Z jumpstart[2097463]: VsanInfoImpl: Failed to retrieve host key from KMS: Invalid key or certs. Will have 0 retries. 2018-08-25T14:38:27Z jumpstart[2097463]: VsanInfoImpl: Failed to load DEKs: Invalid key or certs

From Boot.gz file post reboot , we see that the disks were NOT announced post reboot as we could not retrieve the right key file from host cache as host cache itself could not load the keys from “vsan_kms_castore.pem“

[root@enc-h1:/var/log] vdq -i

[

{

"SSD" : "naa.6000c295967afd2c6e6f2a7a862bf998",

"MD" : [

"naa.6000c29b928af4bfa3fc109492da454e",

]

},

]

[root@enc-h1:/var/log] zcat boot.gz | grep -i "naa.6000c295967afd2c6e6f2a7a862bf998"

2018-08-25T14:38:31.474Z cpu1:2097464)PLOG: PLOG_InitDevice:276: Initialized device M naa.6000c295967afd2c6e6f2a7a862bf998:1 0x430abd085fb0 quiesceTask 0x430abd087230 on SSD 524e53e9-7e08-30e6-61c0-017bc8a01ad0deviceUUID 00000000-0000-0000-0000-00000000$

2018-08-25T14:38:31.474Z cpu1:2097464)WARNING: PLOG: PLOGValidateDisk:2867: Not announcing disk naa.6000c295967afd2c6e6f2a7a862bf998:1 until key is available. Failed obtaining it with status Not found.

2018-08-25T14:38:37.244Z cpu1:2097464)PLOG: PLOGProbeDevice:5728: Probed plog device <naa.6000c295967afd2c6e6f2a7a862bf998:2> 0x430abd0844d0 exists.. continue with old entry

2018-08-25T14:38:37.247Z cpu1:2097464)PLOG: PLOGProbeDevice:5728: Probed plog device <naa.6000c295967afd2c6e6f2a7a862bf998:1> 0x430abd085fb0 exists.. continue with old entry

2018-08-25T14:38:37.247Z cpu1:2097464)WARNING: PLOG: PLOGValidateDisk:2867: Not announcing disk naa.6000c295967afd2c6e6f2a7a862bf998:1 until key is available. Failed obtaining it with status Not found.

[root@enc-h1:/var/log] zcat boot.gz | grep -i "naa.6000c29b928af4bfa3fc109492da454e"

2018-08-25T14:38:31.475Z cpu1:2097464)PLOG: PLOG_InitDevice:276: Initialized device M naa.6000c29b928af4bfa3fc109492da454e:1 0x430abd087720 quiesceTask 0x430abd0889a0 on SSD 524e53e9-7e08-30e6-61c0-017bc8a01ad0deviceUUID 00000000-0000-0000-0000-00000000$

2018-08-25T14:38:31.475Z cpu1:2097464)WARNING: PLOG: PLOGValidateDisk:2867: Not announcing disk naa.6000c29b928af4bfa3fc109492da454e:1 until key is available. Failed obtaining it with status Not found.

2018-08-25T14:38:31.475Z cpu1:2097464)PLOG: PLOG_InitDevice:276: Initialized device D naa.6000c29b928af4bfa3fc109492da454e:2 0x430abd088ef0 quiesceTask 0x430abd08a170 on SSD 524e53e9-7e08-30e6-61c0-017bc8a01ad0deviceUUID 00000000-0000-0000-0000-00000000$

2018-08-25T14:38:37.248Z cpu1:2097464)PLOG: PLOGProbeDevice:5728: Probed plog device <naa.6000c29b928af4bfa3fc109492da454e:1> 0x430abd087720 exists.. continue with old entry

2018-08-25T14:38:37.248Z cpu1:2097464)WARNING: PLOG: PLOGValidateDisk:2867: Not announcing disk naa.6000c29b928af4bfa3fc109492da454e:1 until key is available. Failed obtaining it with status Not found.

2018-08-25T14:38:37.248Z cpu1:2097464)PLOG: PLOGProbeDevice:5728: Probed plog device <naa.6000c29b928af4bfa3fc109492da454e:2> 0x430abd088ef0 exists.. continue with old entry

[root@enc-h1:/var/log]

Next , I tried couple of things , which worked successfully . I had a copy of the key on a notepad , I could simply write to the same file , restarted the management agents and it successfully remounted the disk group , otherwise we can download the same file from a working host copy to the affected host and restart all the management services to get the DG mounted . To make this even simpler as I still had the vcenter in a managed state , I moved the host out of the cluster and moved it back , VC successfully replaced the file and restarting management agents got the DG mounted .

Note*: Moving the host out of the cluster and moving it back only works if VC was healthy and had full control over the affected host , i.e “vCenter state is authoritative” on the host . Disconnecting the host moving it out of the cluster and moving it back will not replace the file .

Once Host moved back in you will notice recent tasks , which updated the vSAN configuration on the all the host including “enc-h1.vsensei.local” which was moved back in . The file gets successfully replaced and disk group gets mounted successfully just after moving it back into cluster , exiting MM mode will reflect correct size of the vSAN datastore .

[root@enc-h1:~] esxcli vsan storage list | grep -i cmmds In CMMDS: true In CMMDS: true

[root@enc-h1:~] df -h (Before Exiting MM Mode) Filesystem Size Used Available Use% Mounted on vsan 24.0G 2.4G 21.6G 10% /vmfs/volumes/vsanDatastore [root@enc-h1:~] df -h (After exiting MM Mode ) Filesystem Size Used Available Use% Mounted on vsan 32.0G 3.2G 28.8G 10% /vmfs/volumes/vsanDatastore

Note* : In certain conditions even if the file is recreated and moved back in , the DiskGroup may not be mounted , a manual DG mount after restarting services also may not help . Its good to reboot the host and disks should get announced successfully post boot up .

KEY error codes to note :

| Error code | Meaning |

|---|---|

| QLC_ERR_COMMUNICATE | Failed to communicate to Key Management Server |

| QLC_NEED_AUTH | User authencication failed to Key Management Server |

| QLC_ERR_TIMEOUT_EXPIRED | Connection timeout to Key Management Server |

Please Make sure to read thru :

https://thevirtualpaddy.wordpress.com/2018/07/11/troubleshooting-vsan-encryption-and-kms-connectivity/ for all additional troubleshooting sections for vSAN and VM encryption

Excellent write-up Hareesh! This will be one that I will keep bookmarked should any customers I come across experience this.