vSAN 7 U1 introduced significant changes to how components are created, especially for objects larger than 255GB. These changes were made to support a minimum slack space requirement that is significantly lower than in all previous versions of vSAN.

I highly encourage you to read the blog articles “Understanding vSAN Objects and Components” and “Understanding VM-Storage-Policies”, as they cover the basics of object and component placement.

Let's first discuss the changes vSAN 7 U1 brought to the slack space requirement, then look at how component placement changed in vSAN 7 U1 and how that helps reduce the slack space requirement.

What is Slack Space or transient capacity?

•Transient capacity is generated when vSAN reconfigures an object.

•Reconfiguration can be user-triggered (EMM/policy change) or vSAN-triggered (repairs/rebalancing).

•A policy change on a 255GB object from FTT1, SW=1 to FTT1, SW=2 results in 510GB of transient capacity.

•This becomes challenging when VM or object sizes are very large. For example, changing the storage policy of a 10TB VM/VMDK from RAID-1 to RAID-5 needs free space equivalent to ~20TB under the older vSAN transient capacity requirement.
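The 255GB example in the list above can be checked with a capacity-only sketch of the older behaviour. It assumes that the whole target layout is written before the old layout is released, so the transient space equals the object size times the capacity multiplier of the target policy. The helper `legacy_transient_gb` and its `target_raid_factor` parameter are hypothetical and not part of any vSAN API.

```python
def legacy_transient_gb(object_size_gb: float, target_raid_factor: float) -> float:
    """Hypothetical model of pre-7 U1 transient capacity for one reconfiguration.

    Assumes the entire target layout is written before the old one is freed,
    so the transient space equals the object size times the capacity
    multiplier of the target policy (2 for FTT=1 RAID-1, i.e. two full mirrors).
    """
    if object_size_gb < 0 or target_raid_factor <= 0:
        raise ValueError("size must be >= 0 and raid factor must be > 0")
    return object_size_gb * target_raid_factor


# The 255GB example: FTT=1, SW=1 -> FTT=1, SW=2 rebuilds two full mirrors
print(legacy_transient_gb(255, 2))  # 510
```

Because the whole object is rebuilt at once, this requirement grows linearly with object size, which is why very large VMDKs were hard to reconfigure.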

What changed in vSAN 7 U1 for transient capacity?

In vSAN 7 U1 and later, CLOM uses a different layout when creating objects. It places a “CONCAT” object at the very top of the tree, creates multiple legs below it according to the RAID policy chosen for the object, and ensures that each RAID leg contains no more than (255*2)GB of components. As a result, when a policy change is made, CLOM does not have to resync the entire object in one shot. It works on each RAID leg separately, so the resync triggered for each leg is no more than (255*RAID-Factor)GB. The RAID-Factor is 2 in most cases.

CLOM can now work on each leg of the CONCAT independently. It creates work items to resync multiple legs at a time, with each work item covering no more than (255*RAID-Factor)GB.
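The leg splitting can be sketched as follows. This assumes the object's address space is cut into consecutive legs of at most 255GB, which matches the 500GB example later in this post (legs of 255GB and 245GB). `split_into_legs` and `per_leg_resync_gb` are hypothetical helpers for illustration, not CLOM code.

```python
LEG_LIMIT_GB = 255  # assumed maximum address space per CONCAT leg


def split_into_legs(object_size_gb: int, leg_limit_gb: int = LEG_LIMIT_GB) -> list[int]:
    """Cut an object's address space into consecutive legs of at most leg_limit_gb."""
    legs = []
    remaining = object_size_gb
    while remaining > 0:
        leg = min(leg_limit_gb, remaining)
        legs.append(leg)
        remaining -= leg
    return legs


def per_leg_resync_gb(object_size_gb: int, raid_factor: int = 2) -> list[int]:
    """Worst-case resync size of each leg: the leg size times the RAID factor."""
    return [leg * raid_factor for leg in split_into_legs(object_size_gb)]


print(split_into_legs(500))    # [255, 245]
print(per_leg_resync_gb(500))  # [510, 490]
# Even a 10TB object never needs more than (255*2)GB for a single leg
print(max(per_leg_resync_gb(10 * 1024)))  # 510
```

The point of the design is visible in the last line: the per-work-item resync size is bounded by the leg limit, not by the object size.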

If the cluster runs out of transient space, CLOM throttles all remaining resync work items, and the pending resyncs are shown under “Scheduled Resync” in the UI. Once transient space is recovered by completing the ongoing resyncs, CLOM starts processing the remaining work items.
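The throttling behaviour can be modelled as a simple batch scheduler. This is a hypothetical sketch: it assumes work items are admitted in order (FIFO) and that a whole batch finishes before the next one starts. Both are simplifications, since the post does not describe CLOM's actual scheduling order. `resync_batches` is an illustrative name only.

```python
def resync_batches(leg_costs_gb: list[int], transient_budget_gb: int) -> list[list[int]]:
    """Group leg work items (by index) into batches that fit the transient budget.

    Items that do not fit in the current batch wait (like entries under
    "Scheduled Resync") until the running batch frees its transient space.
    """
    if any(cost > transient_budget_gb for cost in leg_costs_gb):
        raise ValueError("a single work item exceeds the transient budget")
    batches: list[list[int]] = []
    current: list[int] = []
    used = 0
    for leg, cost in enumerate(leg_costs_gb):
        if used + cost > transient_budget_gb:
            batches.append(current)  # next batch starts once space is recovered
            current, used = [], 0
        current.append(leg)
        used += cost
    if current:
        batches.append(current)
    return batches


# 500GB FTT=1 RAID-1 object: the legs cost (255*2)GB and (245*2)GB
print(resync_batches([510, 490], transient_budget_gb=600))   # [[0], [1]]
print(resync_batches([510, 490], transient_budget_gb=1000))  # [[0, 1]]
```

With little free space the legs resync one after another, and with enough space they run concurrently.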

The new UI in vSAN 7 U1 shows whether a policy change is in a “Pending”, “In progress” or “Failed” state:

•REDUCED_AVAILABILITY_WITH_POLICY_PENDING

•REDUCED_AVAILABILITY_WITH_POLICY_PENDING_FAILED

•NON_HFT_RELATED_INCOMPLIANCE_WITH_POLICY_PENDING

•NON_HFT_RELATED_INCOMPLIANCE_WITH_POLICY_PENDING_FAILED

This new method of component placement reduces the transient/slack space required for storage policy changes and for failure handling at the cluster level, because vSAN works on a smaller subset of components (one leg at a time).

Reserved capacity in vSAN 7 U1 depends on deployment variables such as cluster size:

•12 node cluster = ~18%

•24 node cluster = ~14%

•48 node cluster = ~12%
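To turn these percentages into capacity, here is a small worked calculation. It assumes the percentages apply to the cluster's total raw vSAN capacity and covers only the three cluster sizes listed. The 100TB figure is an arbitrary example, and `usable_after_reserve_tb` is a hypothetical helper.

```python
# Approximate reserved-capacity fractions quoted above for vSAN 7 U1
RESERVED_FRACTION_BY_NODES = {12: 0.18, 24: 0.14, 48: 0.12}


def usable_after_reserve_tb(raw_tb: float, nodes: int) -> float:
    """Capacity left after the quoted reserve (assumes it applies to raw capacity)."""
    if nodes not in RESERVED_FRACTION_BY_NODES:
        raise ValueError(f"no reserve figure quoted for a {nodes}-node cluster")
    return raw_tb * (1 - RESERVED_FRACTION_BY_NODES[nodes])


for nodes in sorted(RESERVED_FRACTION_BY_NODES):
    # Hypothetical 100TB cluster at each of the listed sizes
    print(nodes, round(usable_after_reserve_tb(100, nodes), 1))
```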

Understanding Component Placement in vSAN 7 U1 and above

As discussed in the section “What changed in vSAN 7 U1 for transient capacity?”, nothing changes for VMs and objects smaller than 255GB; the new layout applies only to objects larger than 255GB. If you are currently on vSAN 7.0 GA or an earlier release, you will need to perform an “Object format conversion” after completing the upgrade and the On-Disk-Format upgrade. The vSAN Health plugin will show a warning so that you can validate whether the cluster has enough capacity to convert objects to the newer layout.

Let's now look at the object layouts produced by different storage policies.

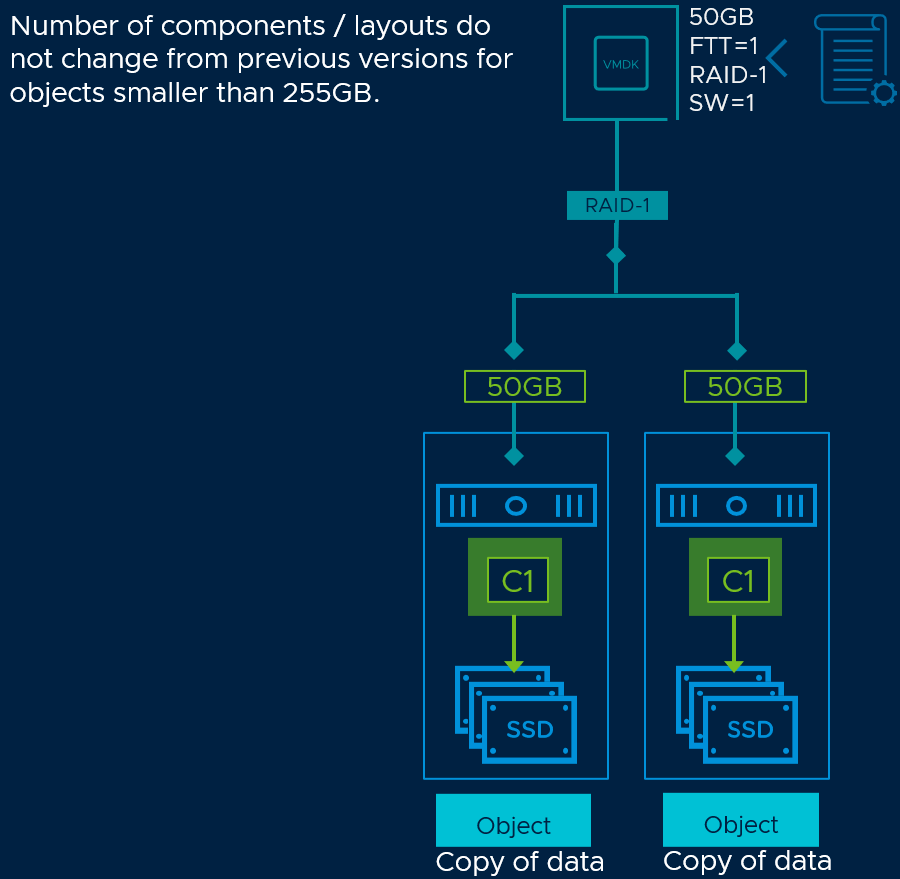

50GB object with FTT-1, RAID-1 and Stripe Width of 1

As mentioned in the earlier section, there is no difference for this 50GB VMDK; the change is seen only with objects larger than 255GB.

50GB object with FTT-1, RAID-1 and Stripe Width of 3

50GB VMDK with FTT-3, RAID-1 and Stripe Width of 1

500GB VMDK with FTT-1, RAID-1 and Stripe Width of 1

In this example of a 500GB object, vSAN created a CONCAT at the top of the tree with two RAID-1 legs, each containing two components. If the policy of this VMDK is changed in the future, CLOM will work on each leg individually. The policy change first resyncs the first RAID-1 tree ((255*2)GB) to the new layout defined by the user, then moves on to the second RAID-1 tree ((245*2)GB). As mentioned earlier, CLOM is allowed to resync multiple legs concurrently as long as the cluster has enough transient space available.
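The 500GB layout just described can be reproduced as a nested structure. This sketch assumes legs of at most 255GB and `ftt + 1` full mirrors per RAID-1 leg. Witness components are not modelled, and `raid1_concat_layout` is a hypothetical helper, not anything CLOM exposes.

```python
def raid1_concat_layout(object_size_gb: int, ftt: int = 1, leg_limit_gb: int = 255) -> dict:
    """Describe a CONCAT -> RAID-1 leg -> mirror component tree (witnesses omitted)."""
    legs = []
    remaining = object_size_gb
    while remaining > 0:
        leg_size = min(leg_limit_gb, remaining)
        # RAID-1 with FTT=n keeps n+1 full copies of the leg's address space
        legs.append({"type": "RAID_1", "components_gb": [leg_size] * (ftt + 1)})
        remaining -= leg_size
    if len(legs) == 1:
        return legs[0]  # objects up to 255GB keep the old layout (no CONCAT)
    return {"type": "CONCAT", "legs": legs}


layout = raid1_concat_layout(500)
for i, leg in enumerate(layout["legs"]):
    # Each leg is resynced as its own work item: the total size of its components
    print(f"leg {i}: components {leg['components_gb']}, resync {sum(leg['components_gb'])}GB")
```

Passing `ftt=3` gives four mirror components per leg instead of two, while the per-leg split stays the same.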

500GB VMDK with FTT-1, RAID-1 and Stripe Width of 3

As shown in the picture, CLOM has created RAID-1 trees with two RAID-0 sets on each leg. In the first leg, each RAID-0 set has three components of 85GB each (255GB / 3). In the second leg, each RAID-0 set has three components of ~81.7GB each (245GB / 3). Each RAID-1 leg therefore stays at or below (255*2) = 510GB.
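The component sizes quoted for this layout follow from dividing each leg evenly across the stripe width. Here is a minimal sketch, assuming equal-sized stripe components and legs of at most 255GB. `stripe_component_sizes_gb` is a hypothetical name.

```python
def stripe_component_sizes_gb(object_size_gb: int, stripe_width: int,
                              leg_limit_gb: int = 255) -> list[float]:
    """Size of each RAID-0 component in every leg (identical across mirrors)."""
    if stripe_width < 1:
        raise ValueError("stripe width must be >= 1")
    sizes = []
    remaining = object_size_gb
    while remaining > 0:
        leg = min(leg_limit_gb, remaining)
        sizes.append(leg / stripe_width)  # leg split evenly across the stripes
        remaining -= leg
    return sizes


print([round(s, 2) for s in stripe_component_sizes_gb(500, stripe_width=3)])  # [85.0, 81.67]
```

With FTT-1, each leg holds two such RAID-0 sets (one per mirror), so the first leg totals 2 × 3 × 85GB = 510GB, which is exactly the (255*2)GB bound.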

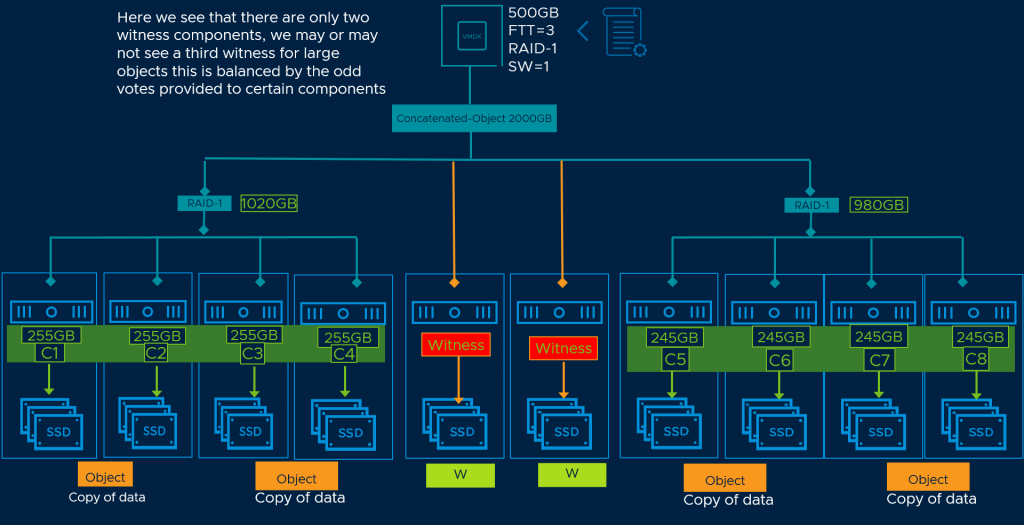

500GB VMDK with FTT-3, RAID-1 and Stripe Width of 1

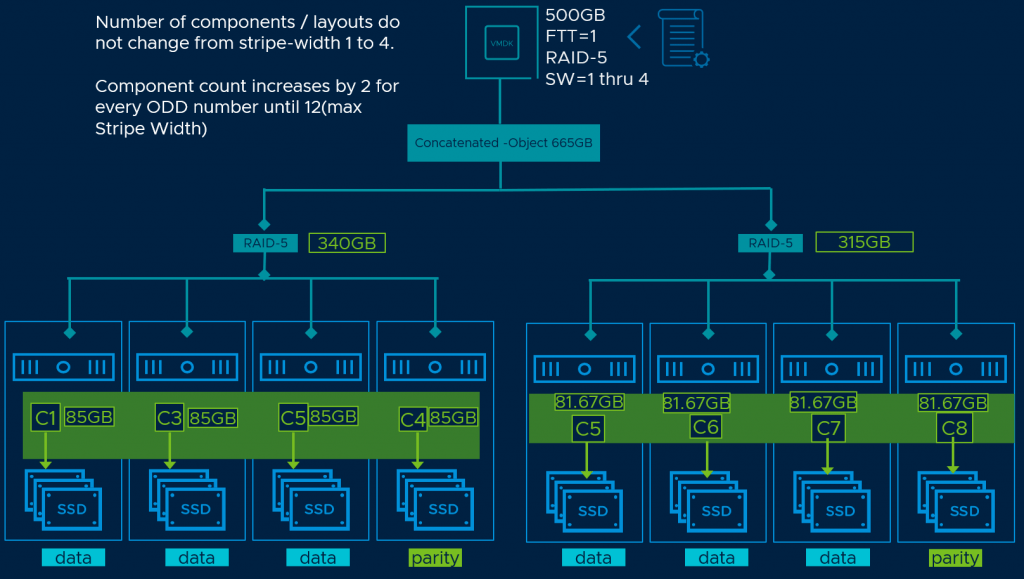

500GB VMDK with FTT-1, RAID-5 and Stripe Width of 1

FTT-1 objects with RAID-5 and a stripe width between 1 and 4 always get four components in each RAID tree. Beyond that, the count increases by two at every odd stripe width: SW=5 and SW=6 give 6 components in each RAID tree, and SW=11 and SW=12 give 12 components in each RAID tree.

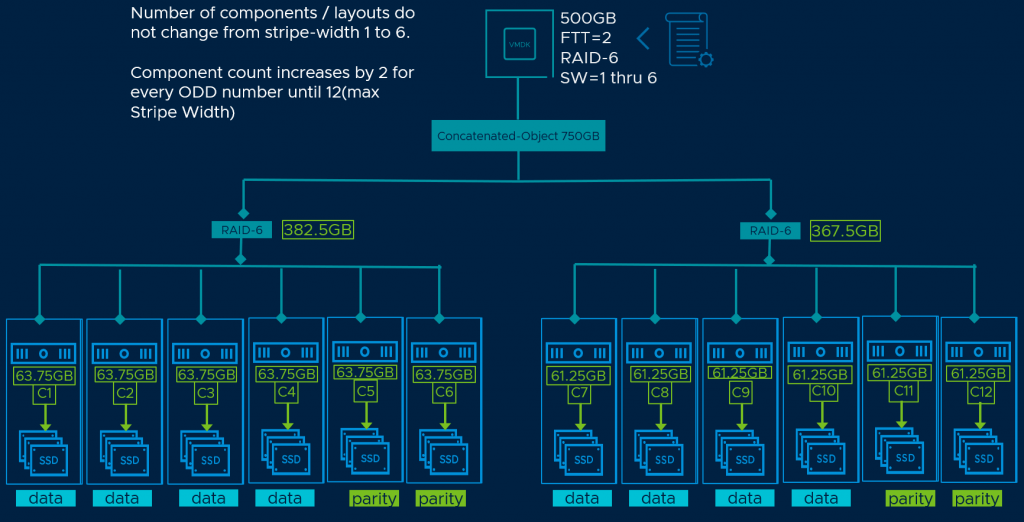

500GB VMDK with FTT-2, RAID-6 and Stripe Width of 1

FTT-2 objects with RAID-6 and a stripe width between 1 and 6 always get six components in each RAID tree. Beyond that, the count increases by two at every odd stripe width: SW=7 and SW=8 give 8 components in each RAID tree, and SW=11 and SW=12 give 12 components in each RAID tree.
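The RAID-5 and RAID-6 rules can both be expressed as: round the stripe width up to the next even number, but never go below the base width (4 for RAID-5, 6 for RAID-6). This sketch is derived only from the examples in this post. Stripe widths not listed here (such as SW=9 or SW=10) are interpolated from the pattern, and `components_per_raid_tree` is a hypothetical helper.

```python
import math

# Minimum components per RAID tree, taken from the examples above
BASE_COMPONENTS = {"RAID-5": 4, "RAID-6": 6}


def components_per_raid_tree(raid: str, stripe_width: int) -> int:
    """Components in each RAID-5/RAID-6 tree for a given stripe width."""
    if stripe_width < 1:
        raise ValueError("stripe width must be >= 1")
    # Round the stripe width up to the next even number, never below the base
    return max(BASE_COMPONENTS[raid], 2 * math.ceil(stripe_width / 2))


for sw in (1, 4, 5, 6, 7, 8, 11, 12):
    print(f"SW={sw}: RAID-5 -> {components_per_raid_tree('RAID-5', sw)}, "
          f"RAID-6 -> {components_per_raid_tree('RAID-6', sw)}")
```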

Drawing a pictorial depiction of every type of storage policy gets complex very quickly. The attached file “Sample_storage_policy.txt” contains the object layouts for a variety of storage policies and stripe widths, if you want to see how placement looks in each case.

There is also an official blog on storage policies for VMC-AWS on vSAN: take a look at “Improved Storage Policy Capacity Management”. To learn about other new features in vSAN 7 U1, see LINK.